AI-Powered Helpdesk on Your Terms

Not AI for the sake of AI, but meaningful automation where it makes a real difference. With Zammad, you decide on the AI model, the infrastructure, and how your data is handled.

- Up to 70% less routine work

- 30%+ efficiency potential*

- 100% data sovereignty

Three Flexible Ways to Run AI in Your Helpdesk

AI should empower your team — not compromise your data. With Zammad, you decide which AI technology is used and where your data is processed.

Zammad AI

A ready-to-use AI solution, operated on secure servers in a controlled environment. Benefit from intelligent support with clearly defined data protection standards.

Bring Your Own AI

Connect your existing OpenAI or Azure account, or integrate other compatible models. You decide which AI model is used within Zammad.

Self-Hosted AI

Connect a local AI instance and process your data exclusively on your own infrastructure — for maximum data sovereignty and control.

"Many AI tools are a black box. Zammad 7.0 is a vault. We enable AI-powered support that meets the strictest European data protection standards by supporting local LLMs (Large Language Models)."

— Martin Edenhofer, Founder & CEO

Human Expertise with AI Power

AI can be amazing — as long as it doesn’t overstep. Zammad AI supports you exactly where daily workflows tend to slow things down.

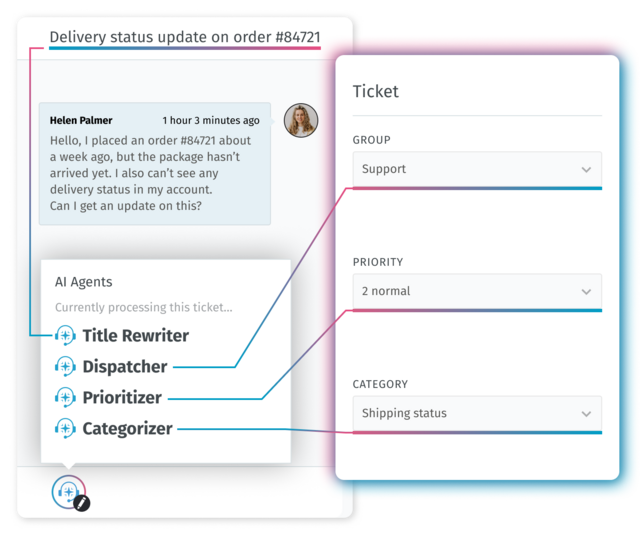

AI Agents

- Automatically detects and populates ticket attributes

- Supports custom and user-defined fields

- Triggers actions such as intelligent ticket routing

- Reduces manual steps and input errors

- Frees up time for what really matters: your customers

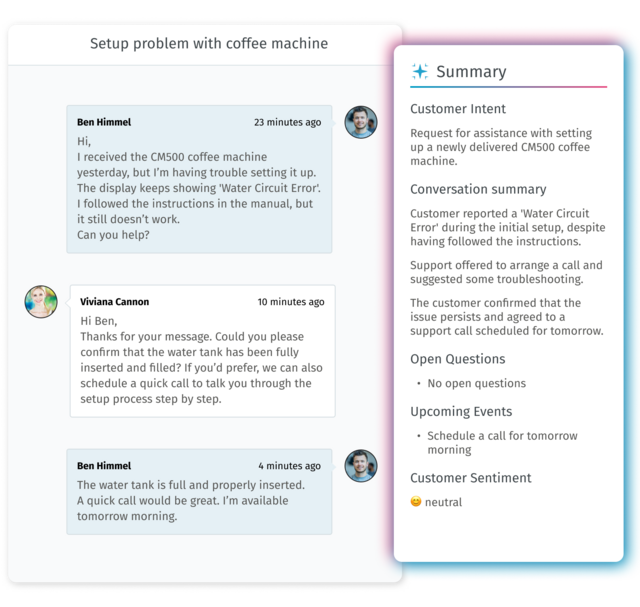

AI Summary

- Extracts the most relevant information automatically

- Turns complex ticket histories into concise overviews

- Highlights important details and turning points

- Analyzes customer sentiment

- Identifies open questions and concrete next steps

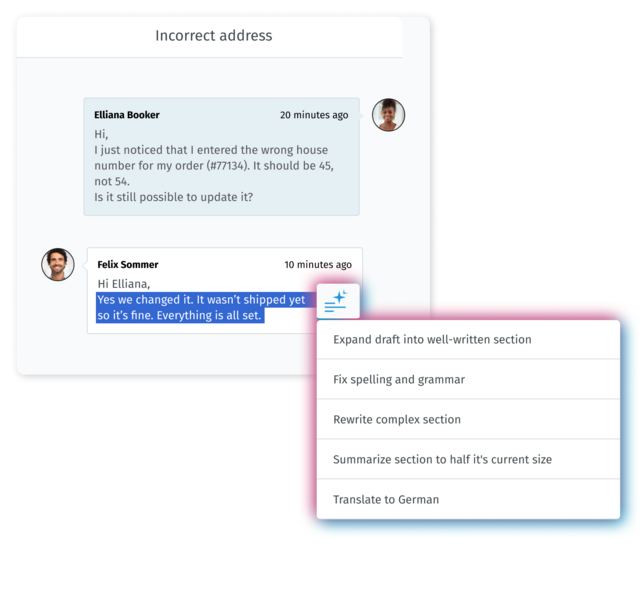

AI Writing Assistant

- Helps craft clear and professional responses

- Improves grammar and spelling

- Ensures a consistent communication style

- Helps meet internal quality standards

- Enables confident customer communication

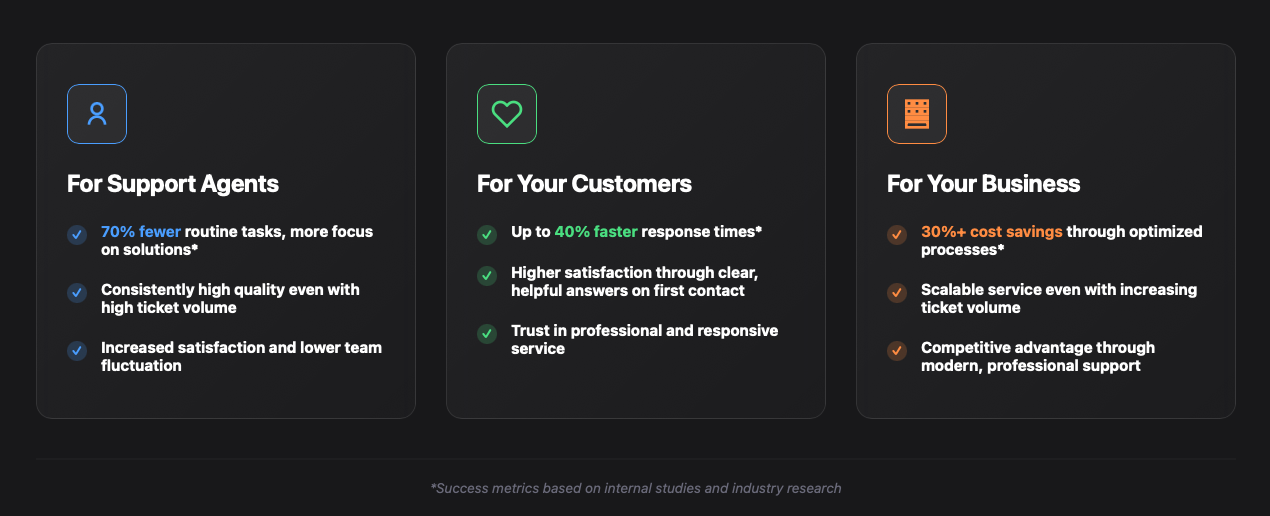

Your Competitive Advantage

Scale your support operations without compromising quality. Turn AI into a strategic advantage that grows with your team.

Sample calculation: ROI of AI summarization

Realistically, around 45% of all tickets need to be escalated to second-level support or reassigned to another agent. This means those tickets are read at least twice: once during handover and once when they are picked up. The following example illustrates the effort involved, based on an assumed volume of 23,000 tickets per year — with and without AI-powered summaries.
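The arithmetic behind this scenario can be sketched in a few lines of Python. The ticket volume (23,000), the escalation share (45%), and the two reads per escalated ticket come from the text above: 45% of 23,000 is 10,350 escalated tickets, or at least 20,700 reads per year. The reading times per ticket are purely hypothetical placeholders, not Zammad figures, and the function name is made up for illustration; replace the values with your own measurements.

```python
def summary_reading_effort(
    tickets_per_year: int,
    escalation_percent: int,
    reads_per_escalated_ticket: int,
    minutes_per_full_read: float,
    minutes_per_summary_read: float,
) -> dict:
    """Estimate yearly reading effort for escalated tickets, with and without summaries."""
    # Integer arithmetic keeps the ticket count exact.
    escalated = tickets_per_year * escalation_percent // 100
    reads = escalated * reads_per_escalated_ticket
    hours_without = reads * minutes_per_full_read / 60
    hours_with = reads * minutes_per_summary_read / 60
    return {
        "escalated_tickets": escalated,
        "reads": reads,
        "hours_without_summary": hours_without,
        "hours_with_summary": hours_with,
        "hours_saved": hours_without - hours_with,
    }


example = summary_reading_effort(
    tickets_per_year=23_000,        # from the sample scenario
    escalation_percent=45,          # from the sample scenario
    reads_per_escalated_ticket=2,   # handover + pickup
    minutes_per_full_read=4.0,      # ASSUMPTION: hypothetical placeholder
    minutes_per_summary_read=1.0,   # ASSUMPTION: hypothetical placeholder
)

for key, value in example.items():
    print(f"{key}: {value}")
```

The result scales linearly with the two assumed reading times, so the estimate is only as good as those inputs.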

What Guides Our Approach to AI

Your Data. Your Decision.

Whether public cloud, private cloud, SaaS, or on-premises — you decide which LLM you use and how your data is handled. Data protection, exactly as you require it.

Openness by Design

Our AI capabilities are built on the same open-source principles as Zammad itself: transparent, auditable, and open to the community.

Focus on Real Value

We don’t chase hype. We build features that measurably improve your support workflows and deliver tangible value — not just impressive demos.

Serving the Support Agents

No AI with a mind of its own — just intelligent tools that free your team to focus on what truly matters: your customers.

Ready for the EU AI Act

Full Sovereignty with Local LLMs

Zammad allows you to connect to Ollama and run powerful language models (such as Llama 3) on your own infrastructure. Not a single word leaves your data center — the most secure way to use AI without third-country data transfers.

Aligned with the EU AI Act

The EU AI Act requires transparency and human oversight. Zammad follows a strict human-in-the-loop approach: AI generates drafts and summaries, but final decisions and approvals always remain with your team.

Transparency Instead of a Black Box

As an open-source platform, Zammad provides a transparent and auditable architecture. You always know how your data is processed.

Protection Against Vendor Lock-in

Stay independent. If regulatory requirements or security needs change, you can switch your AI model in just a few clicks.

"AI should complement human capabilities, not replace them. At Zammad, we develop intelligent tools that help your team provide better, faster, and more personalized customer service—without sacrificing control over data or transparency."

— Gerrit Daute, Product Owner at Zammad

More on Zammad AI

Putting Zammad’s AI to the Test: Progress, Challenges, and Insights from the Beta

Self-Hosting LLMs: What Companies Need to Know

Digital sovereignty in the era of AI

Frequently asked questions about Zammad AI

When will the AI features be available?

The initial AI features are scheduled for release with Zammad 7.0. This page provides an early look at what’s coming.

What approach does Zammad take regarding data protection and security?

We believe in transparency instead of black-box AI. You decide which Large Language Model (LLM) to use and how it is hosted — in the cloud, on-premises, or via Zammad AI. This gives you full control to meet your data protection and compliance requirements at all times.

Do I have to use a specific AI model?

No. We deliberately avoid vendor lock-in. You are free to choose the model and provider that best fit your requirements.

Which AI providers are supported?

Zammad offers flexible integrations so you can choose the solution that best fits your technical and compliance requirements.

Supported models and providers:

- Zammad AI

- OpenAI

- Ollama

- Anthropic

- Azure AI

- Mistral AI

- Custom OpenAI-compatible models
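To illustrate what "OpenAI-compatible" means in practice, here is a minimal sketch of how the same chat-completions request can target either a hosted provider or a local Ollama instance just by changing the base URL and model name. This is not Zammad's implementation. The `Provider` class, the `build_chat_request` helper, the prompt wording, and the model names are illustrative assumptions, and the code only builds the request without sending it.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Provider:
    """Hypothetical description of an OpenAI-compatible endpoint."""
    name: str
    base_url: str
    model: str
    api_key: Optional[str] = None  # local instances often need no key


def build_chat_request(provider: Provider, ticket_text: str):
    """Return (url, headers, body) for a chat-completions style request."""
    url = provider.base_url.rstrip("/") + "/chat/completions"
    headers = {"Content-Type": "application/json"}
    if provider.api_key:
        headers["Authorization"] = f"Bearer {provider.api_key}"
    body = json.dumps({
        "model": provider.model,
        "messages": [
            # ASSUMPTION: illustrative prompt, not the one Zammad uses
            {"role": "system", "content": "Summarize this support ticket."},
            {"role": "user", "content": ticket_text},
        ],
    })
    return url, headers, body


# ASSUMPTION: example model names; use whatever your provider offers.
HOSTED_OPENAI = Provider("OpenAI", "https://api.openai.com/v1", "gpt-4o-mini", api_key="sk-...")
LOCAL_OLLAMA = Provider("Ollama", "http://localhost:11434/v1", "llama3")

url, headers, body = build_chat_request(LOCAL_OLLAMA, "Printer on floor 3 is offline.")
print(url)
```

Because the request shape stays the same, switching providers in a setup like this comes down to swapping the endpoint, model, and credentials.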

Are there additional costs for the AI features?

Yes. Costs depend on whether you use Zammad as a SaaS solution or in a self-hosted setup.

Zammad SaaS:

To use the AI features, you need a plan that includes AI functionality. These plans will be available with the release of Zammad 7.0. When using Zammad AI, a fee of €0.03 per AI call applies.

Zammad Self-Hosted:

In a self-hosted environment, the Zammad administrator decides which LLM to connect — either a local model or an external AI provider. Costs depend on the selected infrastructure and the pricing of the respective provider.
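For the SaaS per-call fee mentioned above, a rough budget estimate is simple multiplication. The sketch below uses the stated fee of €0.03 per AI call. The function name and the call volume in the example are hypothetical, and plan prices are not included because they are not stated here.

```python
from decimal import Decimal

# Fee stated for Zammad AI on SaaS plans.
FEE_PER_AI_CALL_EUR = Decimal("0.03")


def zammad_ai_fee(ai_calls: int) -> Decimal:
    """Return the usage fee in euros for a given number of AI calls."""
    if ai_calls < 0:
        raise ValueError("ai_calls must not be negative")
    return FEE_PER_AI_CALL_EUR * ai_calls


# ASSUMPTION: 10,000 calls per month is an arbitrary example volume.
monthly_calls = 10_000
print(f"{monthly_calls} AI calls cost EUR {zammad_ai_fee(monthly_calls)}")
```

`Decimal` is used instead of floating point so that cent amounts stay exact.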

Can I disable the AI features?

Yes. All AI features can be enabled or disabled individually. You remain in full control over which capabilities you choose to use.